Description

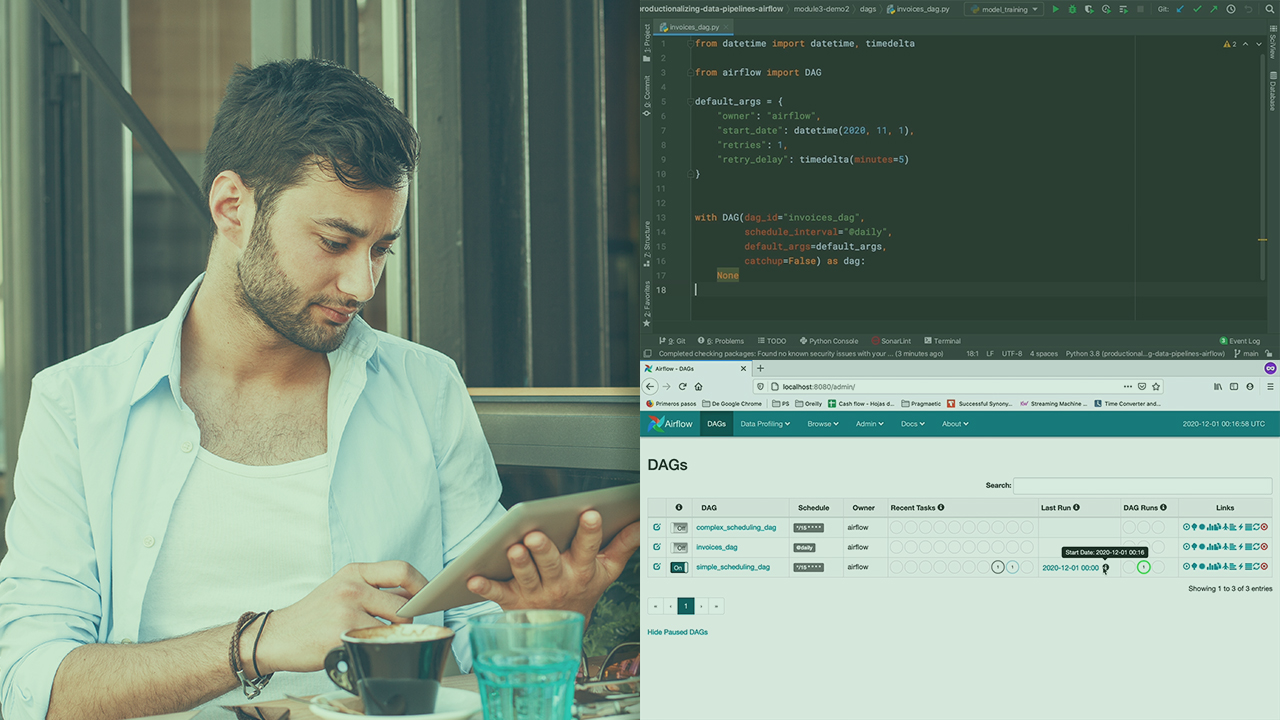

This course teaches you how to secure Airflow with authentication and encryption and how to scale it with Kubernetes. It covers the best practices to follow when working with Apache Airflow and walks you through its core and advanced concepts, as well as its limitations. It starts by explaining the process of taking code to production, then teaches techniques for mastering time zones, unit testing and catchup. By the end of the course, you will have hands-on experience organising the DAG folder and keeping it clean.

Topics Covered:

- Environment: Start by setting up the development environment for Apache Airflow and keeping up to date with its features.

- Basics Of Apache Airflow: Learn the basics of Apache Airflow by installing it and taking a quick tour of it.

- Core Components: Learn how Airflow works, what its limitations are, and what data orchestration means.

- DAG: Learn how to create a DAG with the DAG decorator and start authoring DAGs with the TaskFlow API.

- API Availability: Learn how to check whether an API is available with the sensor decorator, then start retrieving stock prices.

- And many more topics.

Who Will Benefit?

- Data Engineers: Anyone who wants to learn how to build and monitor workflows with Apache Airflow.

- ETL Developers: Developers working on data pipelines, extraction jobs and other automation tasks in Apache Airflow.

- Data Scientists: Anyone who wants to learn how to streamline data collection for more efficient tasks and better analysis.

- Students Of Data Engineering: Students who want hands-on experience with the fundamentals and limitations of Airflow.

Why Choose This Course?

In this course, you will learn how to organise your DAG folder and keep it clean. You will also learn, in detail, the full process of taking code to production and how to approach unit testing. The course teaches the best practices to follow when working with Apache Airflow. By the end of it, you will know how to set up monitoring with Elasticsearch and Grafana.