Description

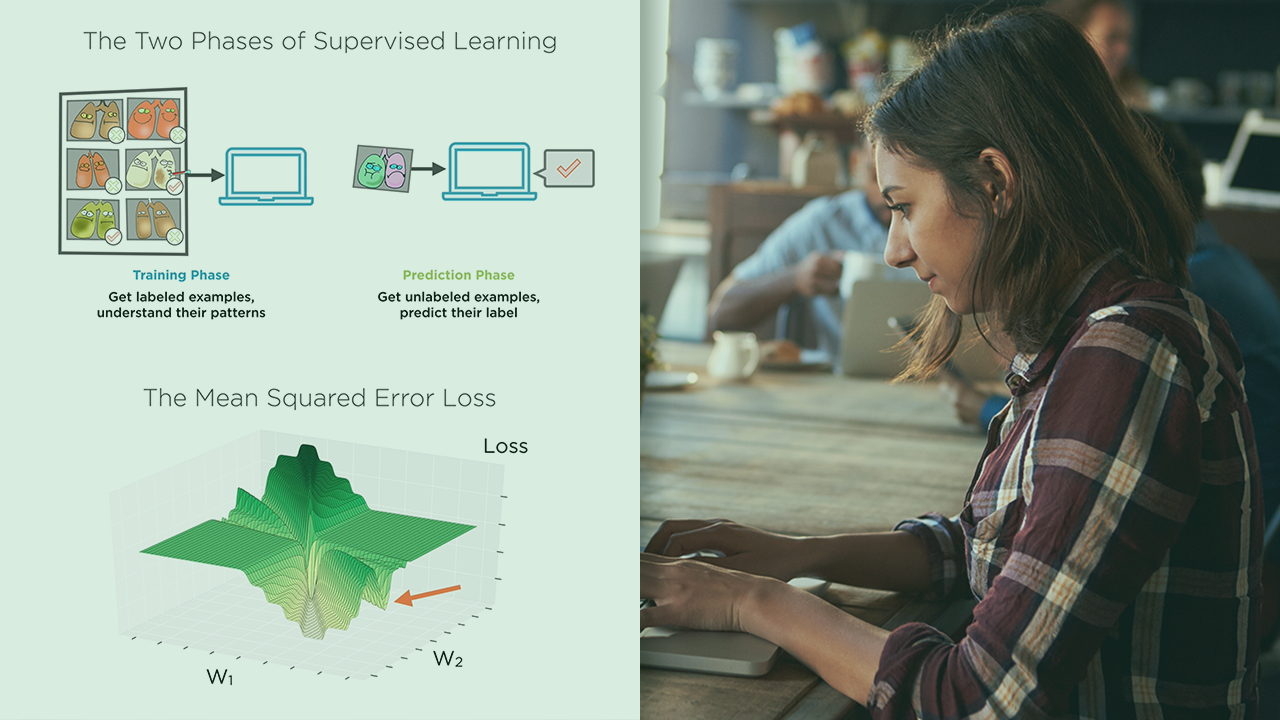

In this course you’ll study ways to combine models like decision trees and logistic regression to build models that can reach much higher accuracies than the base models they are made of.

In particular, we will study the Random Forest and AdaBoost algorithms in detail.

To motivate our discussion, you will learn about an important topic in statistical learning, the bias-variance trade-off. You will then study the bootstrap technique and bagging as methods for reducing variance without substantially increasing bias.

You’ll do plenty of experiments and use these algorithms on real datasets so you can see first-hand how powerful they are.

Since deep learning is so popular these days, you will also study some interesting commonalities between random forests, AdaBoost, and deep neural networks.